Associated Principles

Most of the following content can be found in presentation format: Associated Movements and Principles (slides 89-161). Please note this is the 2024 version of the course and will not be kept updated.

FAIR Data Principles

https://www.go-fair.org/fair-principles/ (Wilkinson et al., 2016)

Findable

- F1. (Meta)data are assigned a globally unique and persistent identifier

- F2. Data are described with rich metadata (defined by R1)

- F3. Metadata clearly and explicitly include the identifier of the data they describe

- F4. (Meta)data are registered or indexed in a searchable resource

Accessible

- A1. (Meta)data are retrievable by their identifier using a standardised communications protocol

- A1.1 The protocol is open, free, and universally implementable

- A1.2 The protocol allows for an authentication and authorisation procedure, where necessary

- A2. Metadata are accessible, even when the data are no longer available

Interoperable

- I1. (Meta)data use a formal, accessible, shared, and broadly applicable language for knowledge representation.

- I2. (Meta)data use vocabularies that follow FAIR principles

- I3. (Meta)data include qualified references to other (meta)data

Reusable

- R1. (Meta)data are richly described with a plurality of accurate and relevant attributes

- R1.1. (Meta)data are released with a clear and accessible data usage license

- R1.2. (Meta)data are associated with detailed provenance

- R1.3. (Meta)data meet domain-relevant community standards

CARE Principles for Indigenous Data Governance

Carroll et al. (2020)

Collective Benefit

- C1. For inclusive development and innovation

- C2. For improved governance and citizen engagement

- C3. For equitable outcomes

Responsibility

- R1. For positive relationships

- R2. For expanding capability and capacity

- R3. For Indigenous languages and worldviews

Ethics

- E1. For minimizing harm and maximizing benefit

- E2. For justice

- E3. For future use

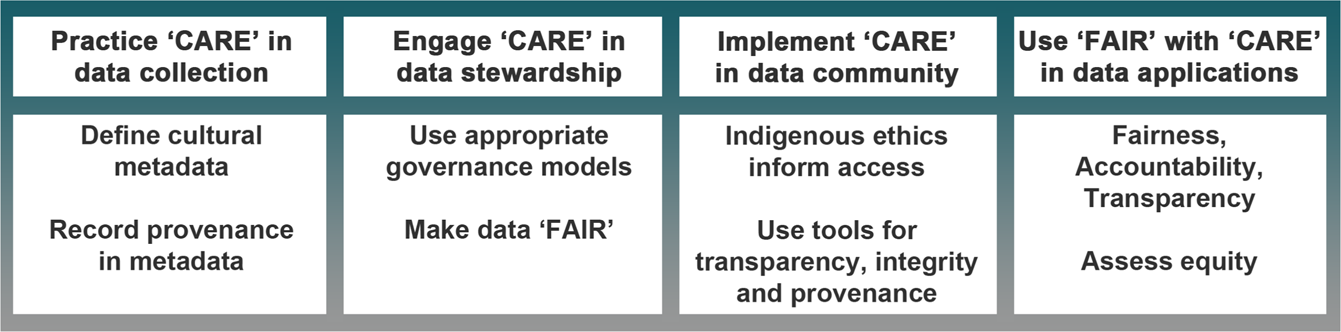

CARE and FAIR

Carroll et al. (2021)

Collections as Data

Summits

- 2017: Santa Barbara Statement (Padilla et al., 2017)

- 2023: Vancouver Statement (Padilla, Scates Kettler, Varner, et al., 2023)

Main outputs

- 10 principles

- ‘Part to Whole’ Report

- Related checklist and initiatives

10 Principles

- Collections as Data development aims to encourage computational use of digitised and born digital collections.

- Collections as Data stewards are guided by ongoing ethical commitments.

- Collections as Data stewards aim to lower barriers to use.

- Collections as Data designed for everyone serve no one.

- Shared documentation helps others find a path to doing the work.

- Collections as Data should be made openly accessible by default, except in cases where ethical or legal obligations preclude it.

- Collections as Data development values interoperability.

- Collections as Data stewards work transparently in order to develop trustworthy, long-lived collections.

- Data as well as the data that describe those data are considered in scope.

- The development of collections as data is an ongoing process and does not necessarily conclude with a final version.

Part to Whole

- Boundary Object Concept: Collections-as-data serve as flexible tools adaptable to various needs while maintaining a common identity (see Star & Griesemer, 1989).

- Ethical Considerations: Emphasis on ethical development and use of collections, especially concerning marginalized communities.

- Community Engagement: Essential for respecting and understanding the context of collections.

- Organisational Structure Support: Effective initiatives require collaboration across various organisational departments.

- Documentation Importance: Crucial for understanding and maintaining collections in the future.

- Community of Practice: Emphasises the need for skill sharing and collaborative environments.

- Future Challenges and Opportunities:

  - Integration of AI and computational tools in collections.

  - Navigating the balance between global collaboration and local cultural sensitivities.

  - Addressing financial and resource limitations for global community growth.

  - Potential and risks of using collections as data for AI training.

Padilla, Scates Kettler, & Shorish (2023)

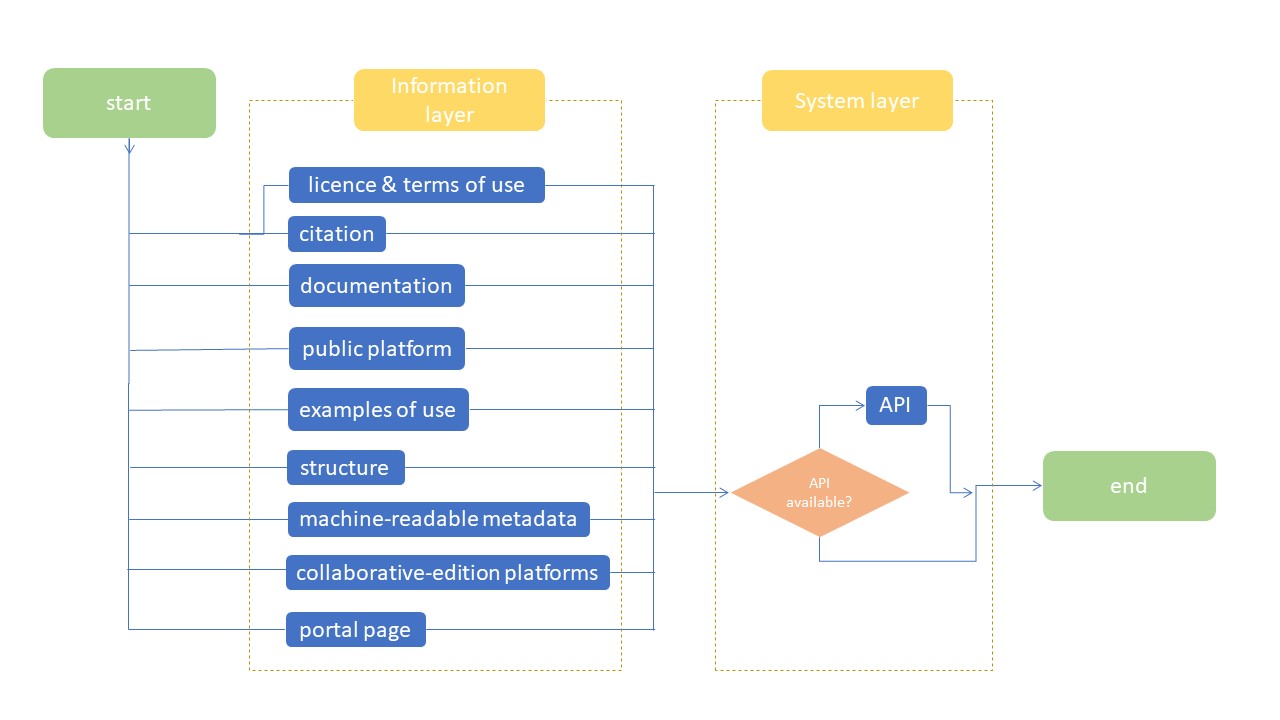

Checklist to publish Collections as Data in GLAM institutions

- Provide a clear license allowing reuse of the dataset without restrictions

- Provide a suggestion of how to cite your dataset

- Include documentation about the dataset

- Use a public platform to publish the dataset

- Share examples of use as additional documentation

- Give structure to the dataset

- Provide machine-readable metadata (about the dataset itself)

- Include your dataset in collaborative edition platforms

- Offer an API to access your repository

- Develop a portal page

- Add a terms of use

Candela et al. (2023)
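Several checklist items (license, citation, machine-readable metadata) can be covered by a small descriptor file shipped with the dataset. The sketch below shows one possible shape and is not a format prescribed by Candela et al. (2023): the keys loosely follow schema.org's Dataset type, which is an assumption here, and every value (title, citation, encoding format) is a hypothetical placeholder.

```python
import json

# Hypothetical descriptor; the keys loosely follow schema.org/Dataset (an assumption).
descriptor = {
    "@context": "https://schema.org/",
    "@type": "Dataset",
    "name": "Example digitised newspaper collection",  # placeholder title
    "description": "Placeholder description of the dataset.",
    "license": "https://creativecommons.org/publicdomain/zero/1.0/",
    "citation": "Example Institution (2024). Example dataset.",  # placeholder
    "encodingFormat": "text/csv",
}

# Keys that map to the license, citation, and documentation checklist items.
REQUIRED = ("name", "description", "license", "citation")


def missing_fields(record):
    """Return the checklist-related keys that are absent or empty."""
    return [key for key in REQUIRED if not record.get(key)]


# Serialise the descriptor so it can be published next to the data files.
descriptor_json = json.dumps(descriptor, indent=2, ensure_ascii=False)
```

Running `missing_fields` before publication gives a quick, if crude, indication of which checklist items still lack machine-readable coverage.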

Workflow

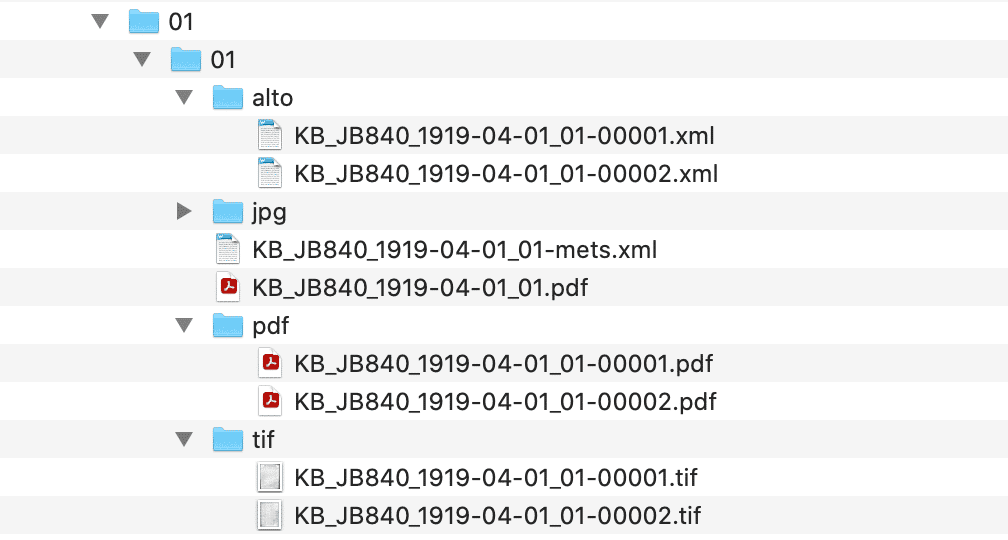

Implementation at the Royal Library of Belgium

- Data-level access to collections

- Digital Humanities Research

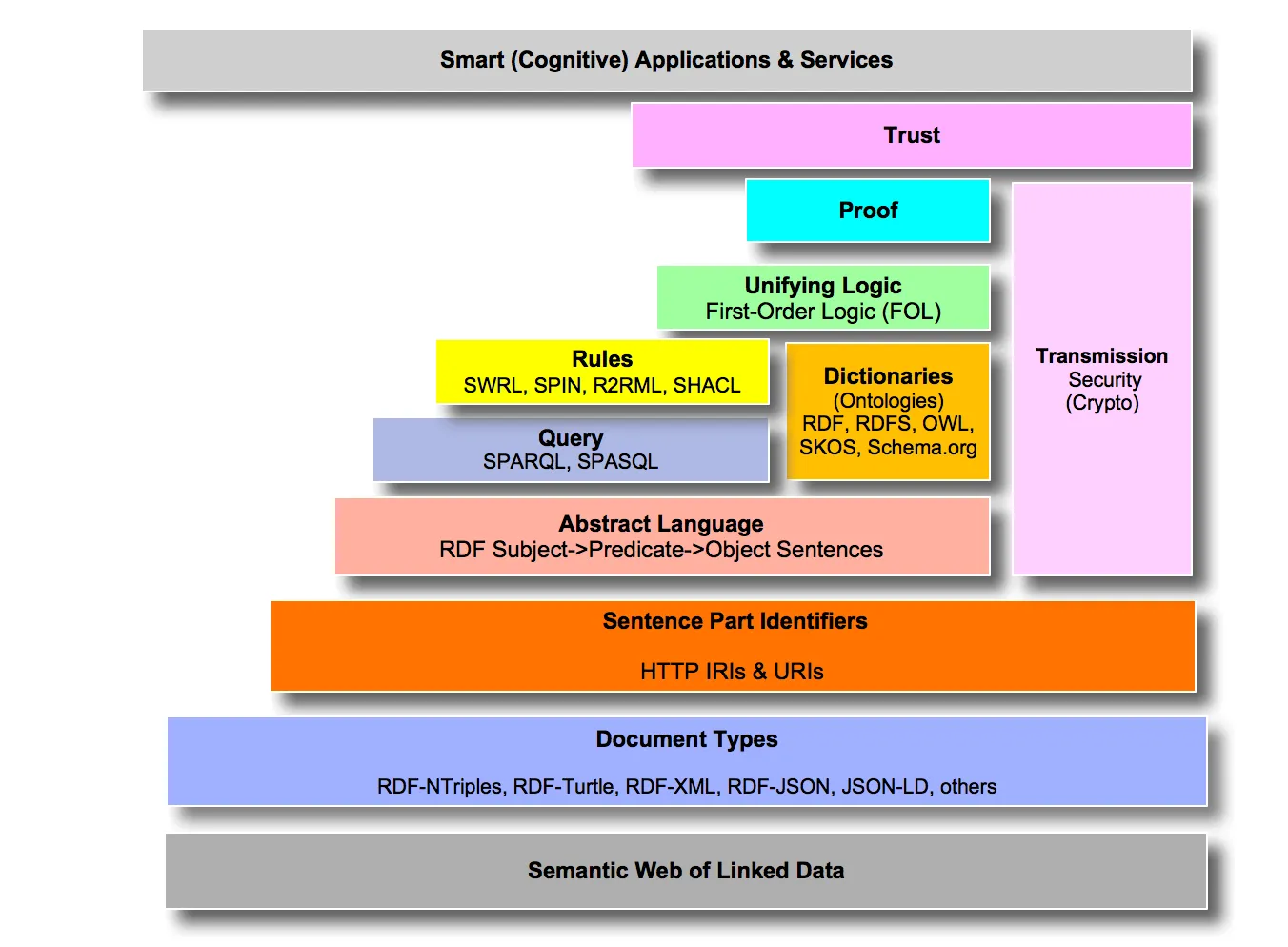

Linked (Open) (Usable) Data

An Open Vision of the Web

The [World Wide Web] project merges the techniques of information retrieval and hypertext to make an easy but powerful global information system. The project started with the philosophy that much academic information should be freely available to anyone.

Berners-Lee (1991)

Linked Data

Linked Data refers to a method of publishing structured data so that it can be interlinked and become more useful through semantic queries. It builds upon standard Web technologies such as HTTP, RDF, and URIs, but rather than using them to serve web pages for human readers, it extends them to share information in a way that can be read automatically by computers. This enables data from different sources to be connected and queried.

Linked Data Principles

- Use Uniform Resource Identifiers (URIs) as names for things

- Use HTTP URIs so that people can look up those names.

- When someone looks up a URI, provide useful information, using the standards (e.g. RDF, RDFS, SPARQL).

- Include links to other URIs so that they can discover more things.

Berners-Lee (2006)
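The second and third principles are often put into practice through HTTP content negotiation: a client looks up the URI and asks for a machine-readable representation. The sketch below only prepares such a request without sending it. The URI is a hypothetical `example.org` address, and whether a given server honours the `Accept` header is an assumption that has to be checked per service.

```python
from urllib.request import Request


def rdf_request(uri, media_type="application/ld+json"):
    """Prepare (but do not send) an HTTP GET that asks for a machine-readable representation."""
    return Request(uri, headers={"Accept": media_type}, method="GET")


# Hypothetical URI naming a thing; a real lookup would use a published identifier.
req = rdf_request("https://example.org/id/thing/1")
```

Passing `req` to `urllib.request.urlopen` would perform the actual lookup. Other media types, such as `text/turtle`, can be requested the same way.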

Linked Open Data (LOD)

Linked Open Data is a subset of Linked Data that is open, meaning it is freely accessible and reusable by anyone. It adheres to the principles of being accessible under an open license, available in a machine-readable format, using open standards from the W3C (such as RDF and SPARQL), and linked to other datasets to increase its utility.

The Semantic Web or the Web of Data

The Semantic Web extends the World Wide Web through standards that make its content machine-readable.

Idehen (2017)

Resource Description Framework (RDF)

With RDF, everything goes in threes: the data model consists of so-called triples (subject, predicate, object) that together form graphs.

Syntax: subject, predicate, object \((s \ \vec{p} \ o)\)

Most of the components of these triples use Uniform Resource Identifiers (URIs) and are generally web-addressable, whether for naming subjects and objects (which may themselves also be objects of other triples) or relationships.
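As a minimal sketch of the triple model, the snippet below stores triples as plain Python tuples and indexes them by subject, which is enough to see how they chain into a graph. It deliberately avoids any RDF library. The `https://example.org/` namespace and the predicate names are hypothetical.

```python
from collections import defaultdict
from typing import NamedTuple


class Triple(NamedTuple):
    subject: str
    predicate: str
    obj: str


EX = "https://example.org/"  # hypothetical namespace, for illustration only

triples = [
    Triple(EX + "painting/1", EX + "createdBy", EX + "person/1"),
    # person/1 is the object of the triple above and the subject of this one.
    Triple(EX + "person/1", EX + "bornIn", EX + "place/1"),
    # Objects can also be literals rather than URIs.
    Triple(EX + "painting/1", EX + "title", '"Example title"'),
]


def outgoing(graph_triples):
    """Index triples by subject, giving an adjacency list of the graph."""
    index = defaultdict(list)
    for t in graph_triples:
        index[t.subject].append((t.predicate, t.obj))
    return dict(index)


graph = outgoing(triples)
```

Following `graph[EX + "painting/1"]` to `person/1` and then to `place/1` is a small-scale version of the graph traversal that semantic queries perform.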

Linked Open Usable Data (LOUD)

The concept of LOUD extends LOD by emphasising not just the openness and interlinking of data but also its usability.

- The term was coined by Robert Sanderson (2018, 2019) who has been involved in the conception and maintenance of web standards, mainly in the cultural heritage field.

- LOUD’s goal is to achieve the Semantic Web’s intent on a global scale in a usable fashion by leveraging community-driven and JSON-LD-based specifications.

- It has five main design principles to make the data more easily accessible to software developers, who play a key role in interacting with the data and building software and services on top of it, and to some extent to academics.

LOUD Design Principles

- The right Abstraction for the audience

- Few Barriers to entry

- Comprehensible by introspection

- Documentation with working examples

- Few Exceptions, instead many consistent patterns

LOUD Standards/Communities

The IIIF and Linked Art communities are built on a synergy of social and technical integration, with a strong focus on usability. They are unified by shared expertise and leadership, fostering collaboration across technical boundaries. These communities prioritise inclusivity and diversity of participation, ensuring that a wide range of perspectives contribute to their work. Openness and friendliness are core values, along with a strong commitment to transparency in processes and decision-making. To facilitate engagement and knowledge sharing, they organise both online and face-to-face meetings to strengthen connections across the community.

(see Newbury, 2018; Raemy, 2023)

International Image Interoperability Framework (IIIF)

- A model for presenting and annotating content

- A global community that develops shared application programming interfaces (APIs), implements them in software, and exposes interoperable content

Snydman et al. (2015)

IIIF Community

- State and National Libraries: Bavarian State Library, French National Library (BnF), British Library, National Library of Estonia, New York Public Library, Vatican Library, Zentralbibliothek Zürich, etc.

- Archives: Blavatnik Foundation Archive, Indigenous Digital Archive, Internet Archive, Swedish National Archives, Swiss Federal Archives, etc.

- Museums & Galleries: Art Institute of Chicago, J. Paul Getty Trust, Smithsonian, Victoria & Albert Museum, MIT Museum, National Gallery of Art, Van Gogh Worldwide, etc.

- Universities & Research Institutions: Cambridge, Cornell University, Ghent University, Swiss National Data and Service Center for the Humanities (DaSCH), Kyoto University, Oxford, Stanford, University of Toronto, Yale University, etc.

- Aggregators/Facilitators: Europeana, Cuba-IIIF, Cultural Japan, OCLC CONTENTdm, etc.

Practices

Raemy (2024)

Capabilities

Deep zoom with large images

Ōmi Kuni-ezu – 近江國絵圖 https://purl.stanford.edu/hs631zg4177

Compare images

Letter from Alexander Hamilton Papers (September 6, 1780), Library of Congress. https://prtd.app/#72f604db-6869-4c08-91ce-7c79502a7f35. IIIF Manifest: https://dvp.prtd.app/hamilton/manifest.json

Reunify

Manuscrit reconstitué : Châteauroux, Bibliothèque municipale, ms. 5 (Grandes Chroniques de France). https://demos.biblissima.fr/chateauroux/demo/

Search within

Franks, Kendal; Royal College of Surgeons of England. The Germ Theory. via Wellcome Library.

Storytelling

Storiiies. https://www.cogapp.com/r-d/storiiies

Crowdsource

Crowdsourcing initiative from the National Library of Wales

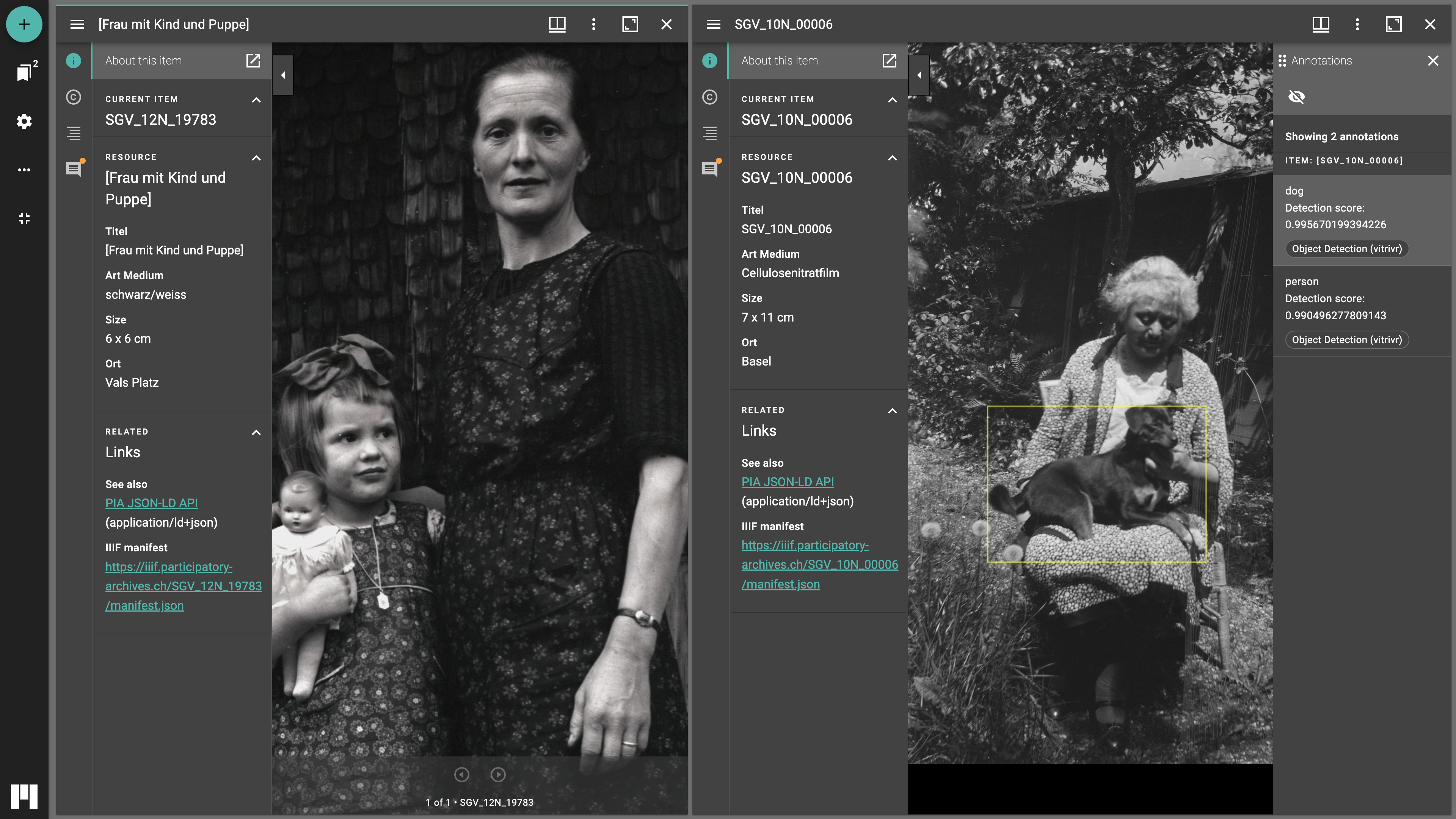

Machine-generated annotations

(see Cornut et al., 2023)

IIIF – Beyond Images

https://ddmal.ca/IIIF-AV-player/

Layers of digitisation

Leiden Collection’s Curtain Viewer. https://www.theleidencollection.com/viewer/david-and-uriah/

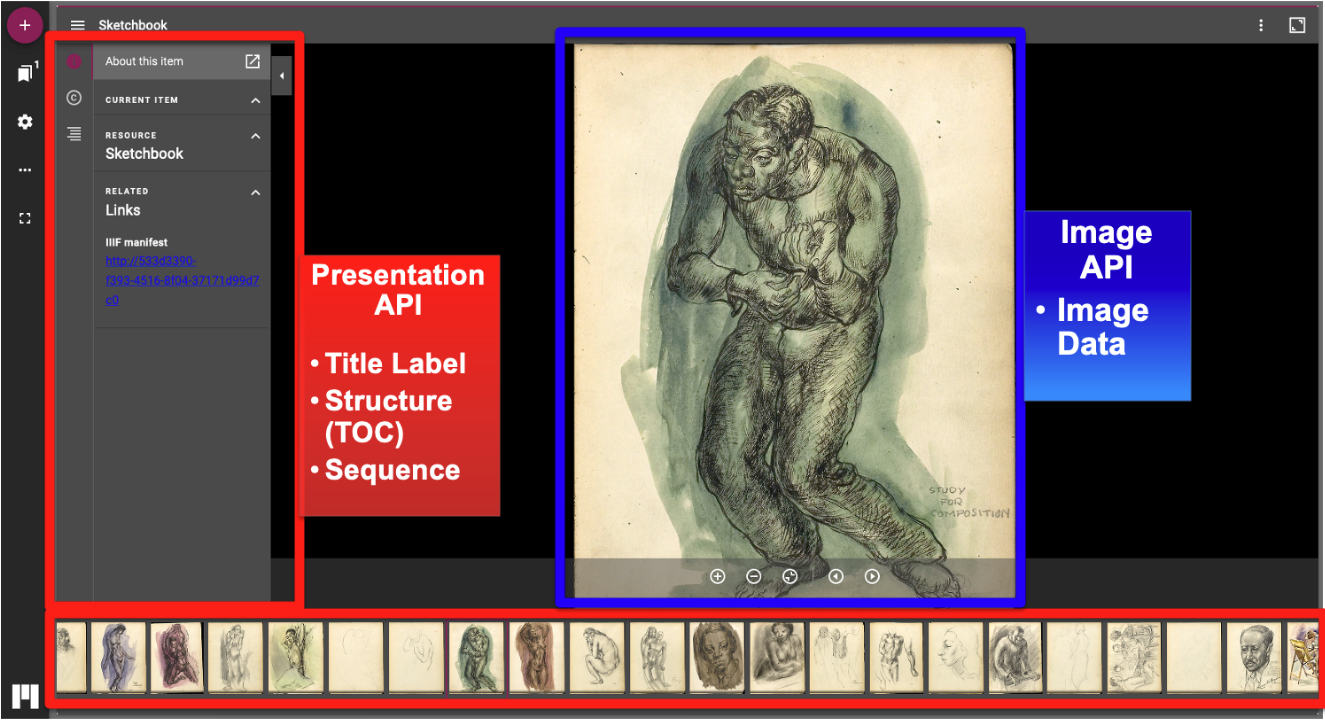

IIIF Specifications

| Application programming interface (API) | Description |

|---|---|

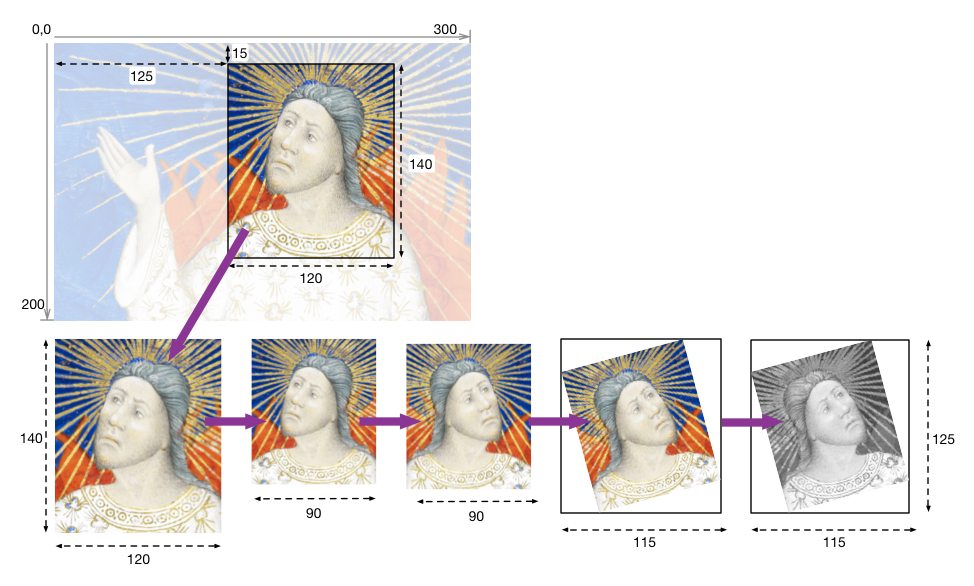

| Image API | The IIIF Image API specifies a web service that returns an image in response to a standard HTTP(S) request. The URI can specify the region, size, rotation, quality characteristics and format of the requested image. |

| Presentation API | The IIIF Presentation API provides the information necessary to allow a rich, online viewing environment for compound digital objects to be presented to a human user, often in conjunction with the IIIF Image API. |

| Authorization Flow API | The IIIF Authorization Flow specification describes a set of workflows for guiding the user through an existing access control system. |

| Change Discovery API | The IIIF Change Discovery API provides the information needed to discover and make use of IIIF resources. |

| Content Search API | The IIIF Content Search API specification lays out the interoperability mechanism for performing searches of text annotations associated with an object within the IIIF context. |

| Content State API | The IIIF Content State API provides a way to refer to a IIIF Presentation API resource, or a part of a resource, in a compact format that can be used to initialize the view of that resource in any client. |

The Image and Presentation APIs are referred to as the core IIIF APIs

IIIF Image API

| Type | Syntax | Example |
|---|---|---|
| Base URI | {scheme}://{server}{/prefix}/{identifier} | https://iiif.dasch.swiss/0812/276uIbjSulF-k5RrtYZ3LUA.jpx |
| Image Request | {$BASE}/{region}/{size}/{rotation}/{quality}.{format} | https://iiif.dasch.swiss/0812/276uIbjSulF-k5RrtYZ3LUA.jpx/full/1000,/0/default.jpg |
| Image Information (Metadata) | {$BASE}/info.json | https://iiif.dasch.swiss/0812/276uIbjSulF-k5RrtYZ3LUA.jpx/info.json |
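The URI templates in the table can be assembled with plain string formatting. The helper below is a minimal sketch and not part of any IIIF library: the function names and default values are assumptions chosen for illustration (`max` is the full-size keyword in version 3 of the Image API, while version 2 uses `full`), and no validation of the parameters is attempted.

```python
def image_request(base_uri, region="full", size="max", rotation="0",
                  quality="default", fmt="jpg"):
    """Build {$BASE}/{region}/{size}/{rotation}/{quality}.{format}."""
    return f"{base_uri.rstrip('/')}/{region}/{size}/{rotation}/{quality}.{fmt}"


def info_request(base_uri):
    """Build {$BASE}/info.json, the image information document."""
    return f"{base_uri.rstrip('/')}/info.json"


# Base URI taken from the table above.
BASE = "https://iiif.dasch.swiss/0812/276uIbjSulF-k5RrtYZ3LUA.jpx"

# Full region, scaled to a width of 1000 pixels, no rotation.
url = image_request(BASE, size="1000,")
info_url = info_request(BASE)
```

With these inputs, `url` reproduces the Image Request example from the table and `info_url` the Image Information example.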

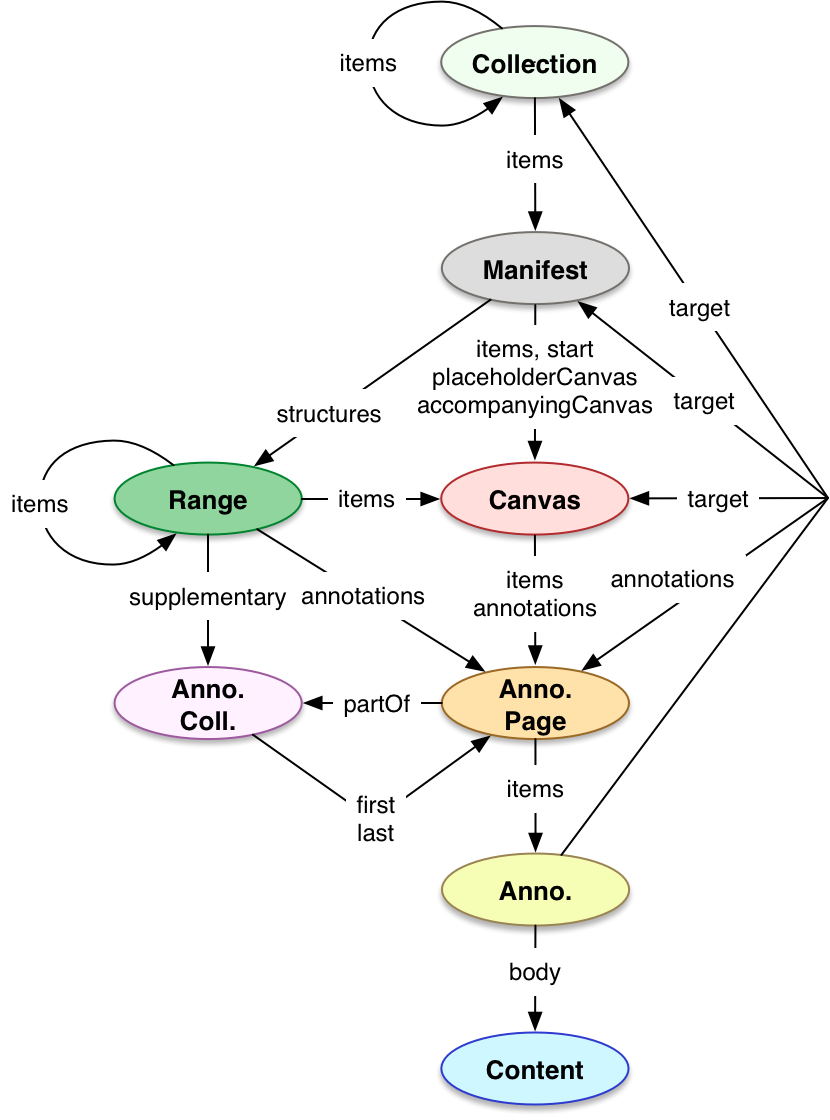

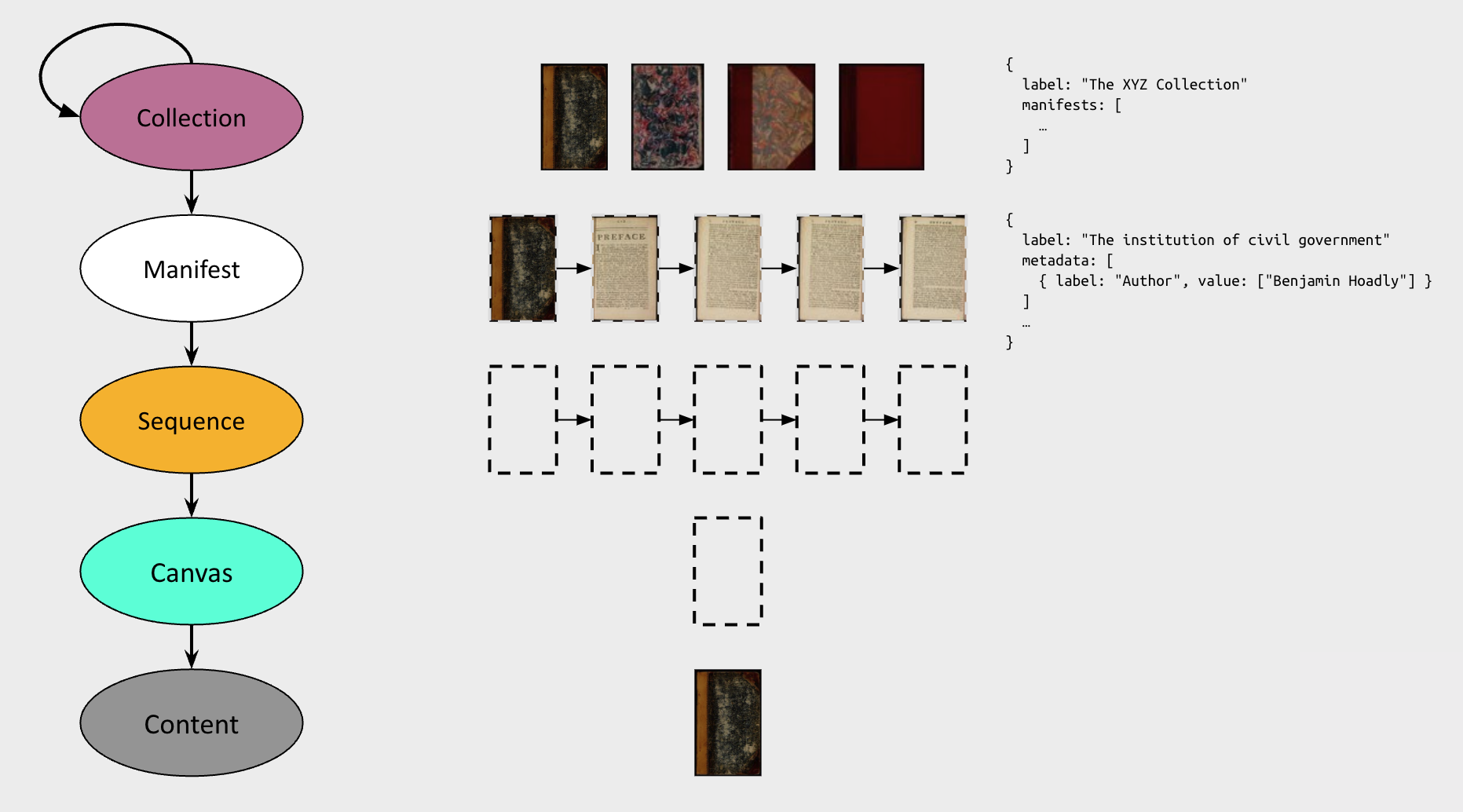

IIIF Presentation API

Presentation API Data Model

Main types

Manifest

{

"@context": "http://iiif.io/api/presentation/3/context.json",

"id": "https://manifests.collections.yale.edu/yuag/obj/293797",

"type": "Manifest",

"label": {

"en": [

"Simon de Vlieger, Fisherfolk and Other Figures on a Beach, 1642"

]

},

"metadata": [

{

"label": {

"en": [

"Copyright Statement"

]

},

"value": {

"en": [

"Public Domain"

]

}

},

{

"label": {

"en": [

"Title"

]

},

"value": {

"en": [

"Fisherfolk and Other Figures on a Beach"

]

}

},

{

"label": {

"en": [

"Creator(s)"

]

},

"value": {

"en": [

"Artist: Simon de Vlieger (Dutch, 1601–1653)"

]

}

},

{

"label": {

"en": [

"Culture"

]

},

"value": {

"en": [

"Dutch"

]

}

},

{

"label": {

"en": [

"Date"

]

},

"value": {

"en": [

"1642"

]

}

},

{

"label": {

"en": [

"Medium"

]

},

"value": {

"en": [

"Oil on panel"

]

}

},

{

"label": {

"en": [

"Dimensions"

]

},

"value": {

"en": [

"32 1/8 × 52 13/16 in. (81.6 × 134.2 cm)"

]

}

},

{

"label": {

"en": [

"Creditline"

]

},

"value": {

"en": [

"Dr. Herbert and Monika Schaefer Fund"

]

}

},

{

"label": {

"en": [

"Classification"

]

},

"value": {

"en": [

"Paintings"

]

}

},

{

"label": {

"en": [

"Department"

]

},

"value": {

"en": [

"European Art"

]

}

},

{

"label": {

"en": [

"Institution"

]

},

"value": {

"en": [

"Yale University Art Gallery"

]

}

},

{

"label": {

"en": [

"Object Number"

]

},

"value": {

"en": [

"2021.16.1"

]

}

}

],

"rights": "https://creativecommons.org/publicdomain/zero/1.0/",

"requiredStatement": {

"label": {

"en": [

"Rights Description"

]

},

"value": {

"en": [

"Data Provided about Yale University Art Gallery collections are public domain. Rights restrictions may apply to cultural works or images of those works."

]

}

},

"logo": [

{

"id": "https://artgallery.yale.edu/sites/default/files/2023-03/LUX_YUAG_logo.png",

"type": "Image"

}

],

"homepage": [

{

"format": "text/html",

"id": "https://artgallery.yale.edu/collections/objects/293797",

"label": {

"en": [

"catalog entry at the Yale University Art Gallery"

]

},

"language": "en",

"type": "Text"

}

],

"items": [

{

"id": "https://manifests.collections.yale.edu/canvas/yuag/ca7804d1-5fbc-4bce-9343-007bcb018c32",

"type": "Canvas",

"label": {

"en": [

"Image from Yale University"

]

},

"height": 5940,

"width": 9833,

"items": [

{

"id": "https://manifests.collections.yale.edu/annopage/yuag/ca7804d1-5fbc-4bce-9343-007bcb018c32",

"type": "AnnotationPage",

"items": [

{

"id": "https://manifests.collections.yale.edu/annotation/yuag/ca7804d1-5fbc-4bce-9343-007bcb018c32",

"type": "Annotation",

"motivation": "painting",

"body": {

"id": "https://images.collections.yale.edu/iiif/2/yuag:ca7804d1-5fbc-4bce-9343-007bcb018c32/full/full/0/default.jpg",

"type": "Image",

"format": "image/jpeg",

"service": [

{

"@id": "https://images.collections.yale.edu/iiif/2/yuag:ca7804d1-5fbc-4bce-9343-007bcb018c32",

"@type": "ImageService2",

"profile": "http://iiif.io/api/image/2/level2.json"

}

],

"height": 5940,

"width": 9833

},

"target": "https://manifests.collections.yale.edu/canvas/yuag/ca7804d1-5fbc-4bce-9343-007bcb018c32"

}

]

}

],

"rendering": [

{

"id": "https://media.collections.yale.edu/tiff/yuag/ca7804d1-5fbc-4bce-9343-007bcb018c32.tif",

"type": "Image",

"label": {

"en": [

"TIFF for download"

]

},

"format": "image/tiff"

}

],

"thumbnail": [

{

"id": "https://media.collections.yale.edu/thumbnail/yuag/ca7804d1-5fbc-4bce-9343-007bcb018c32",

"type": "Image",

"format": "image/jpeg",

"width": 480,

"height": 290

}

],

"metadata": [

{

"label": {

"en": [

"Image Use Rights"

]

},

"value": {

"en": [

"No Copyright - United States"

]

}

}

]

}

]

}

Ecosystem

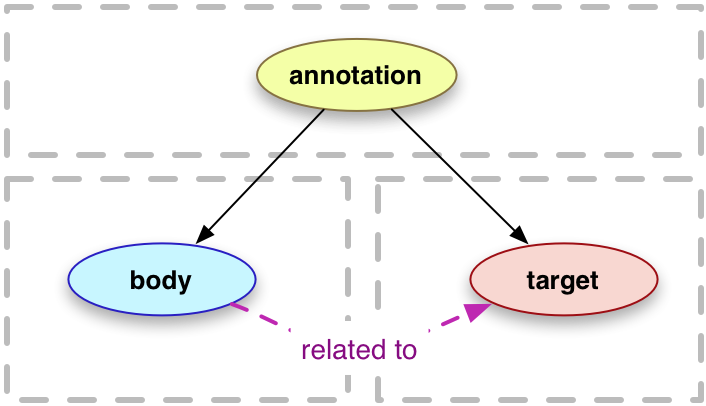
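Clients in this ecosystem typically start from a Manifest such as the Yale example shown earlier and walk down its structure (Manifest, Canvas, AnnotationPage, Annotation) to find the images to display. The sketch below traverses a heavily trimmed copy of that manifest. It assumes each painting annotation has a single `body` object, which holds for this example but not for every manifest, and the function name is hypothetical.

```python
CANVAS = "https://manifests.collections.yale.edu/canvas/yuag/ca7804d1-5fbc-4bce-9343-007bcb018c32"
SERVICE = "https://images.collections.yale.edu/iiif/2/yuag:ca7804d1-5fbc-4bce-9343-007bcb018c32"
BODY = SERVICE + "/full/full/0/default.jpg"

# Trimmed copy of the example manifest: only the keys needed for traversal.
manifest = {
    "type": "Manifest",
    "items": [{
        "id": CANVAS,
        "type": "Canvas",
        "items": [{
            "type": "AnnotationPage",
            "items": [{
                "type": "Annotation",
                "motivation": "painting",
                "body": {
                    "id": BODY,
                    "type": "Image",
                    "service": [{"@id": SERVICE, "@type": "ImageService2"}],
                },
                "target": CANVAS,
            }],
        }],
    }],
}


def painted_images(manifest):
    """Yield (canvas id, image body id, image service ids) per painting annotation."""
    for canvas in manifest.get("items", []):
        for page in canvas.get("items", []):
            for anno in page.get("items", []):
                if anno.get("motivation") != "painting":
                    continue
                body = anno.get("body", {})
                # Version 3 services use "id"; embedded version 2 services use "@id".
                services = [s.get("id") or s.get("@id")
                            for s in body.get("service", [])]
                yield canvas["id"], body.get("id"), services


results = list(painted_images(manifest))
```

Each returned service identifier is an Image API base URI, so a viewer can append `/info.json` to it to learn which sizes and tiles are available.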

Web Annotation Data Model

The Web Annotation Data Model, standardized by the World Wide Web Consortium (W3C) in 2017, provides an extensible and interoperable framework for creating and sharing annotations across different platforms. It supports a wide range of use cases, from simple annotations to linking content with multimedia data points. It leverages JSON-LD for serialization, enabling integration with the web’s structured data ecosystem while following Linked Data principles (see Haslhofer et al., 2011; Sanderson et al., 2013).

The model follows a three-part structure:

- Target: The resource being annotated.

- Body: The annotation content (text, image, media, etc.).

- Annotation: The entity linking the body and target.

This model ensures compatibility with web architecture and facilitates cross-platform sharing of annotations.
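The three-part structure maps directly onto a JSON-LD object. The sketch below builds a minimal annotation whose target is a rectangular region of a resource, and parses the region back out. All identifiers use a hypothetical `example.org` domain, the helper names are assumptions, and the parser only handles the plain integer `xywh=` form of a media fragment (not the `pixel:` or `percent:` variants).

```python
def make_annotation(anno_id, target_id, text, region):
    """Link a textual body to a rectangular region (x, y, w, h) of the target."""
    x, y, w, h = region
    return {
        "@context": "http://www.w3.org/ns/anno.jsonld",
        "id": anno_id,
        "type": "Annotation",
        "motivation": "commenting",
        "body": {"type": "TextualBody", "value": text},
        "target": {
            "source": target_id,
            "selector": {
                "type": "FragmentSelector",
                "conformsTo": "http://www.w3.org/TR/media-frags/",
                "value": f"xywh={x},{y},{w},{h}",
            },
        },
    }


def parse_xywh(fragment):
    """Parse a value such as 'xywh=319,2942,463,523' into four integers."""
    prefix = "xywh="
    if not fragment.startswith(prefix):
        raise ValueError(f"unsupported fragment: {fragment!r}")
    x, y, w, h = (int(part) for part in fragment[len(prefix):].split(","))
    return x, y, w, h


# Hypothetical identifiers, for illustration only.
anno = make_annotation(
    "https://example.org/annotations/1",
    "https://example.org/canvas/p1",
    "person",
    (319, 2942, 463, 523),
)
region = parse_xywh(anno["target"]["selector"]["value"])
```

Keeping the region in a selector rather than in the target URI leaves the `source` as a clean identifier that other annotations on the same resource can share.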

The Web Annotation Data Model in a IIIF setting

{

"@context": "http://iiif.io/api/presentation/3/context.json",

"id": "https://iiif.participatory-archives.ch/annotations/SGV_12N_08589-p1-list.json",

"type": "AnnotationPage",

"items": [

{

"@context": "http://www.w3.org/ns/anno.jsonld",

"id": "https://iiif.participatory-archives.ch/annotations/SGV_12N_08589-p1-list/annotation-436121.json",

"motivation": "commenting",

"type": "Annotation",

"body": [

{

"type": "TextualBody",

"value": "person",

"purpose": "commenting"

},

{

"type": "TextualBody",

"value": "Object Detection (vitrivr)",

"purpose": "tagging"

},

{

"type": "TextualBody",

"value": "<br><small>Detection score: 0.9574</small>",

"purpose": "commenting"

}

],

"target": {

"source": "https://iiif.participatory-archives.ch/SGV_12N_08589/canvas/p1",

"selector": {

"type": "FragmentSelector",

"conformsTo": "http://www.w3.org/TR/media-frags/",

"value": "xywh=319,2942,463,523"

},

"dcterms:isPartOf": {

"type": "Manifest",

"id": "https://iiif.participatory-archives.ch/SGV_12N_08589/manifest.json"

}}},

Linked Art

Linked Art is a community and a CIDOC (ICOM International Committee for Documentation) Working Group collaborating to define a metadata application profile for describing cultural heritage, and the technical means for conveniently interacting with it (the API).

Community

Linked Art is an open community initiative where participants collaborate under a shared code of conduct. Ways to engage include attending events, joining the discussion group, participating in the Slack workspace, or contributing to discussions on GitHub.

A wide range of institutions contribute to Linked Art, including The Metropolitan Museum of Art, J. Paul Getty Trust, Yale University, Smithsonian Institution, Europeana, Canadian Heritage Information Network (CHIN), Oxford University, Victoria and Albert Museum, Rijksmuseum, The Frick Collection, Museum of Modern Art (MoMA), and many more. Since 2019, an editorial board has overseen Linked Art, ensuring diverse representation across institutions and disciplines.

Data Model

| Level | Linked Art |

|---|---|

| Conceptual Model | CIDOC Conceptual Reference Model (CRM) |

| Ontology | RDF encoding of CRM 7.1, plus extensions |

| Vocabulary | Getty Vocabularies, mainly the Art & Architecture Thesaurus (AAT), as well as the Thesaurus of Geographic Names (TGN) and the Union List of Artist Names (ULAN) |

| Profile | Object-based cultural heritage (mainly art museum oriented) |

| API | JSON-LD 1.1, following REST (representational state transfer) and web patterns |

Linked Art from 50k feet

Raemy et al. (2023)

Digital Object

{

"@context": "https://linked.art/ns/v1/linked-art.json",

"id": "https://linked.art/example/digital/0",

"type": "DigitalObject",

"_label": "Digital Image of Self-Portrait of Van Gogh",

"classified_as": [

{

"id": "http://vocab.getty.edu/aat/300215302",

"type": "Type",

"_label": "Digital Image"

}

],

"identified_by": [

{

"type": "Name",

"content": "Self-Portrait Dedicated to Paul Gauguin"

},

{

"type": "Identifier",

"classified_as": [

{

"id": "http://vocab.getty.edu/aat/300404621",

"type": "Type",

"_label": "Owner-Assigned Number"

}

],

"content": "47174896"

}

],

"dimension": [

{

"type": "Dimension",

"classified_as": [

{

"id": "http://vocab.getty.edu/aat/300055644",

"type": "Type",

"_label": "Height"

}

],

"value": 2550,

"unit": {

"id": "http://vocab.getty.edu/aat/300266190",

"type": "MeasurementUnit",

"_label": "pixels"

}

},

{

"type": "Dimension",

"classified_as": [

{

"id": "http://vocab.getty.edu/aat/300055647",

"type": "Type",

"_label": "Width"

}

],

"value": 2087,

"unit": {

"id": "http://vocab.getty.edu/aat/300266190",

"type": "MeasurementUnit",

"_label": "pixels"

}

}

],

"part_of": [

{

"id": "https://iiif.harvardartmuseums.org/manifests/object/299843",

"type": "DigitalObject",

"_label": "IIIF Manifest"

}

],

"member_of": [

{

"id": "https://linked.art/example/set/0",

"type": "Set",

"_label": "Images of Self-Portraits of Van Gogh"

}

],

"access_point": [

{

"id": "https://ids.lib.harvard.edu/ids/iiif/47174896/full/full/0/default.jpg",

"type": "DigitalObject"

}

],

"digitally_shows": [

{

"type": "VisualItem",

"_label": "Visual Content of Self-Portrait of Van Gogh"

}

],

"format": "image/jpeg",

"digitally_available_via": [

{

"type": "DigitalService",

"_label": "IIIF Service",

"access_point": [

{

"id": "https://ids.lib.harvard.edu/ids/iiif/47174896",

"type": "DigitalObject"

}

],

"conforms_to": [

{

"id": "https://iiif.io/api/image/2/context.json",

"type": "InformationObject"

}

]

}

],

"created_by": {

"type": "Creation",

"carried_out_by": [

{

"id": "https://linked.art/example/group/harvardartmuseums.org",

"type": "Group",

"_label": "Harvard Art Museums"

}

],

"used_specific_object": [

{

"id": "https://harvardartmuseums.org/collections/object/299843",

"type": "HumanMadeObject",

"_label": "Self-Portrait of Van Gogh"

}

]

}

}

Linked Art Digital Integration (with IIIF)
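One way to see the integration in practice is to follow a Linked Art DigitalObject to its IIIF Image API endpoint. The sketch below works on a trimmed copy of the record shown above. It assumes that an IIIF image service can be recognised by a `conforms_to` identifier containing `iiif.io/api/image` and that its `access_point` is the Image API base URI. Both hold for this example but are assumptions about other datasets, and the function name is hypothetical.

```python
# Trimmed copy of the DigitalObject example: only the service-related keys.
digital_object = {
    "id": "https://linked.art/example/digital/0",
    "type": "DigitalObject",
    "digitally_available_via": [{
        "type": "DigitalService",
        "_label": "IIIF Service",
        "access_point": [{
            "id": "https://ids.lib.harvard.edu/ids/iiif/47174896",
            "type": "DigitalObject",
        }],
        "conforms_to": [{
            "id": "https://iiif.io/api/image/2/context.json",
            "type": "InformationObject",
        }],
    }],
}


def iiif_info_urls(record):
    """Return the info.json URL of every IIIF image service on the record."""
    urls = []
    for service in record.get("digitally_available_via", []):
        conforms = [c.get("id", "") for c in service.get("conforms_to", [])]
        if not any("iiif.io/api/image" in c for c in conforms):
            continue  # not an IIIF Image API service
        for point in service.get("access_point", []):
            urls.append(point["id"].rstrip("/") + "/info.json")
    return urls


info_urls = iiif_info_urls(digital_object)
```

The `part_of` reference in the full record points the other way, from the image to the IIIF Manifest that presents it, so a client can move between the semantic description and the viewing experience in either direction.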